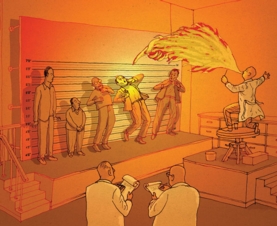

CAN WE TRUST CRIME FORENSICS? (Scientific American)

See also the SALLY CLARK CASE

Here is an excerpt from Chapter 6 of my Personal History of Bayesian Statistics (2014):

A leading UCL Bayesian was very much involved in the eventually very tragic 1999 Sally Clark case, in which a 35-year-old solicitor was convicted of murdering her two babies, whose deaths had originally been attributed to sudden infant death syndrome.

Professor Philip Dawid, an expert called by Sally Clark’s team during the appeals process, pointed out that applying the same flawed method to the statistics for infant murder in England and Wales in 1996 could suggest that the probability of two babies in one family being murdered was 1 in 2,152,224,291 (that is, roughly 1 in 2.15 billion), a figure even more outlandish than the 1 in 73 million suggested by the highly eminent prosecution expert witness Professor Sir Roy Meadow, when the relevant probability was a 2 in 3 chance of innocence. Sir Roy was later struck off the medical register, but was reinstated upon appeal. Sally Clark died in 2007, four years after being released from prison on appeal.

Here is another excerpt from the same chapter:

The Bayesian history of the twentieth century would not, however, be complete without a discussion of the ways misguided versions of Bayes theorem were misused in the O.J. Simpson murder and Adams rape trials during attempts to quantify DNA evidence in the Courtroom.

Suppose more generally that some traces of DNA are found at a crime scene, and that a suspect is subsequently apprehended and charged. Then it might be possible for the Court to assess the ‘prior odds’ Φ/(1-Φ) that the trace came from the DNA of the defendant, where the prior probability Φ refers to the other, human, evidence in the case. Then according to an easy rearrangement of Bayes theorem, the ‘posterior odds of a perfect match’ equal the prior odds multiplied by a Bayes factor R, which is a ‘measure of evidence’ in the sense that it measures the information provided by the forensic scientists relating to their observations of the suspect’s DNA. This has frequently been based on their observations of the suspect’s allele lengths during 15 purportedly independent probes.

In the all too frequent special case where Φ is set equal to 0.5, the posterior probability of a perfect match is λ=R/(R+1). In the United Kingdom, R turns out to be a billion with remarkable regularity, in which case 1-λ, loosely speaking the posterior probability that the trace at the crime scene doesn’t come from the defendant’s DNA, is one in a billion and one, and this is usually regarded as overwhelming evidence against the defendant.
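
The arithmetic in this special case is easy to check. Here is a minimal sketch using exact fractions; the function name `posterior_probability` is my own illustrative choice and does not come from any forensic software.

```python
from fractions import Fraction

def posterior_probability(prior, bayes_factor):
    """Odds form of Bayes theorem: posterior odds = prior odds * Bayes factor."""
    prior = Fraction(prior)
    prior_odds = prior / (1 - prior)
    posterior_odds = prior_odds * bayes_factor
    # Convert the posterior odds back into a probability.
    return posterior_odds / (1 + posterior_odds)

R = 10**9                       # the 'billion' quoted in the text
lam = posterior_probability(Fraction(1, 2), R)

print(lam == Fraction(R, R + 1))   # True: lambda = R/(R+1) when the prior is 0.5
print(1 - lam)                     # 1/1000000001, i.e. one in a billion and one
```

The whole of the ‘one in a billion and one’ conclusion therefore rests on the two inputs, the prior of 0.5 and the value of R.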

While this procedure is being continuously modified, it has frequently been, at least in the past, outrageously and unscientifically incorrect, for the following reasons:

1. If there is no prior evidence, then, according to Laplace’s Principle of Insufficient Reason, Φ should be set equal to 1/N, where N is the size of the population of suspects, for example the size, maybe around 25 million, of the population of eligible males in the United Kingdom.

In contrast, a prior probability of 0.5 is often introduced into the courtroom via Erik Essen-Möller’s shameless device [79] of a ‘random man’, which is just a mathematical trick, or by similarly fallacious arguments which have even been advocated by some leading proponents of the Bayesian paradigm. Essen-Möller’s formula was published in Vienna in 1938 around the time of the Nazi annexation of Austria. Maybe Hitler was the random man!

Erik Essen-Möller later made various influential contributions to the ‘genetic study’ of psychiatry and psychology, including schizophrenia, and was much fêted in his field. Jesus wept!

2. The genocrats don’t represent R by a Bayes factor, but rather by a likelihood ratio. In the case where 15 DNA probes are employed, the combined likelihood ratio R incorporates empirical estimates of 15 population distributions of allele lengths. These non-parametric empirical estimates were, during the 1990s, frequently derived from 15 small, non-random, samples of the allele lengths and can be highly statistically deficient in nature. See, for example, the excellent 1994 discussion paper and review by Kathryn Roeder in Statistical Science.

3. The overall, combined likelihood ratio R has typically been calculated by multiplying 15 individual likelihood ratios together. The multiplications would be open to some sort of justification if the empirical evidence from the 15 probes could be regarded as statistically independent. The genocrats and forensic scientists seek to justify statistical independence by the genetic independence which occurs in homogeneous populations which are in a state of Hardy-Weinberg equilibrium, e.g. when individuals choose their mates at random from all individuals of opposite gender in the population. However, most populations are highly heterogeneous and most people I know don’t choose their partners at random. For example, most heterosexual people choose heterosexual or bisexual partners.

An inappropriate homogeneity assumption can greatly inflate the overall likelihood ratio R as a purported measure of evidence against the defendant. For example, if the individual likelihood ratios are each equal to four, then R is four to the power fifteen, which exceeds a billion, in situations where the ‘true’ combined measure of evidence might be quite small.
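
A small numerical sketch shows how much these choices matter. It takes the illustrative figures from the text (15 likelihood ratios of four each, and a suspect population of around 25 million) and swaps the prior of 0.5 for Laplace’s 1/N; the variable names are mine, and the independence needed to multiply the ratios is simply assumed here for the sake of the arithmetic.

```python
from fractions import Fraction

N = 25_000_000          # approximate size of the suspect population (from the text)
R = 4**15               # fifteen likelihood ratios of 4 multiplied together
print(R)                # 1073741824, which exceeds a billion

def prob_not_source(prior, likelihood_ratio):
    """Posterior probability that the trace does NOT come from the defendant."""
    prior_odds = prior / (1 - prior)
    posterior_odds = prior_odds * likelihood_ratio
    return 1 / (1 + posterior_odds)

half = prob_not_source(Fraction(1, 2), R)       # the courtroom prior of 0.5
laplace = prob_not_source(Fraction(1, N), R)    # Laplace's prior of 1/N

print(float(half))      # of the order of one in a billion
print(float(laplace))   # roughly 0.023, i.e. about one chance in 44
```

With the same R, moving from a prior of 0.5 to 1/N changes the probability that the trace is not the defendant’s from about one in a billion to about one in 44, and that is before questioning whether the multiplication itself is justified.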

During the trial (1995) of the iconic American football star O.J. Simpson for the murder of his long-suffering former wife Nicole, the celebrated prosecution expert witness Professor Bruce Weir of North Carolina State University attempted to introduce blood and DNA evidence into the Courtroom via a similarly misguided misapplication of Bayes Theorem. The scientific content of his evidence was refuted by our very own Aussie Bayesian, Professor Terry Speed of the University of California at Berkeley. Terry was assisted in his efforts by an arithmetic error made by Professor Weir during the presentation of the prosecution testimony. However, when Simpson was acquitted in criminal court, the media largely attributed this to the way a police officer had planted the forensic evidence by throwing it over a garden wall.

During the early 1990s, the defendant in the Denis Adams rape case was convicted on the basis of the high value of a purported likelihood ratio, even though the victim firmly stated that he wasn’t the man who’d raped her. Adams moreover appeared to have had a cast-iron alibi, since several witnesses said that he was with them many miles away at the time of the crime.

The defence expert witness, the much-respected Professor Peter Donnelly of the University of Oxford, asked the members of the jury to assess their prior probabilities by referring to the human evidence in the case, in a valiant attempt to counter the influence of the large purported combined likelihood ratio. In retrospect, Peter should have gone straight for the jugular by refuting the grossly inflated likelihood ratio along the applied statistical lines I describe above.

When the Court of Appeal later upheld Adams’ conviction, they strongly criticised the use of prior probabilities as a mode for assessing human evidence and effectively threw Bayes Theorem out of Court, in that particular case at least. Adrian Smith, at that time the President of the Royal Statistical Society, was not at all amused by that rude affront to his raison d’être.

For alternative viewpoints regarding the apparent misapplications of Bayes theorem in legal cases, see the well-cited books by Colin Aitken and Franco Taroni, and David Balding, and the 1997 Royal Statistical Society invited discussion paper by L.A. Foreman, Adrian Smith and Ian Evett.

Colin Aitken is Professor of Forensic Statistics at the University of Edinburgh. Jack Good’s earlier ‘justification’ of the widespread use of Bayes factors as measures of evidence is most clearly reported on page 247 of Colin and Franco’s widely cited book Statistical Evaluation of Evidence for Forensic Scientists, and on page 389 of Good’s 1988 paper ‘The Interface between Statistics and Philosophy of Science’ in Statistical Science.

Jack Good invokes Themis, the ancient Greek goddess of justice, who was said to be holding a pair of scales on which she weighed opposing arguments. Colin Aitken is a leading advocate of the use of Bayes factors in criminal cases, and his officially documented recommendations to British Courts of Law depend heavily on Good’s key conclusion that any sensible additive weight of evidence must be the log of a Bayes factor. That creates visions of the Goddess Themis weighing the logs of Bayes factors on her scales and putting herself at loggerheads with the judge.

Maybe I’m missing something, though perhaps not. Good’s ‘justification’ seems to be rather circuitous, and indeed little more than a regurgitation of the additive property,

Posterior log-odds = Prior log-odds + log(Bayes factor),

which can of course be extended to justify the addition of the log Bayes factors from successive experiments. This simple rearrangement of Bayes Theorem would, at first sight, appear to justify Good’s apparently seminal conclusion. However, since Bayes factors frequently possess counterintuitive properties (see Ch. 2), the entire idea of assigning a positive probability to a ‘sharp’, i.e. simple or only partly composite, null hypothesis, is open to serious question in situations where the alternative hypothesis is composite.

The approach to multivariate binary discrimination described by John Aitchison and Colin Aitken in their 1976 paper in Biometrika is much more convincing, as they use a non-parametric kernel method to empirically estimate the denominator in the Bayes factor. But a posterior probability cannot justifiably be associated with it, even as a limiting approximation.

Colin’s parametric Bayes factors are of course still useful if they are employed as test statistics, in which case they will always possess appealing frequency properties. See [15], pp 162-163. It’s when you try to use Bayes theorem to directly convert a Bayes factor into a posterior probability that there’s been trouble at Mill. The trouble could be averted by associating each of Colin’s Bayes factors with a Baskurt-Evans style Bayesian p-value. Perhaps Colin should guide the legal profession further, by writing another book on the subject.

In [2] and [3], Jack Good used Bayes factors as measures of evidence when constructing an ambiguously defined measure of explicativity, which is, loosely speaking, ‘the extent to which one proposition or event explains why another should be believed’. I again find Jack’s mathematical formulation of an interesting, though not all pervading, philosophical concept to be a bit too fanciful.

The co-authors of a 1997 R.S.S. invited paper on topics relating to the genetic evaluation of evidence included Dr. Ian Evett of the British Forensic Science Service and my nemesis Adrian Smith. They, however, chose not to substantively reply, in their formal response to the discussants, to the further searching questions which I included in my written contribution to the discussion of their paper.

These were inspired by my numerous experiences as a defence expert witness in U.S. Courts. In 1992, I’d successfully challenged an alleged probability of paternity of 99.99994% in Phillips, Wisconsin, and district attorneys in the Mid-West used to settle paternity testing cases when they heard I was coming. The 1992 ‘Rosie and the ten construction workers case’, when I refuted a prior probability of paternity of 0.5 and a related posterior probability of over 99.99% in Decorah, Iowa, seemed to turn a Forensic Statistics conference in Edinburgh in 1996 head over heels, and Phil Dawid and Julia Mortera made lots of amusing jokes about it over dinner while Ian Evett and Bruce Weir fumed in the background. I, however, always declined to participate in the gruesome rape and murder cases in Chicago.

Nevertheless, during a subsequent light-hearted public talk on a different topic at the 1997 Science Festival in Edinburgh, Adrian somewhat petulantly singled me out as a person who disagreed with him. I’m glad that I had the temerity to do so.

Our leading statisticians, and forensic scientists, should be ultra-careful not to put the public or the remainder of their profession into a state of mystification. Jimmie Savage had a habit of putting down statisticians who questioned him e.g. his very sharp, though self-effacing, brother-in-law Frank Anscombe, who was an occasional Bayesian, and John Tukey is said to have put pressure on George Box to leave Princeton in 1960 for related reasons, after Tukey received some of the same medicine when he visited Ronald Fisher for afternoon tea. Holier-than-thou statisticians should realise that they are likely to be wrong some of the time, at least in concept, along with everybody else. As George Box once said, ‘We should always be prepared to forgive ourselves when we screw up.’

Some further problems inherent in the Bayesian evaluation of legal evidence are satirized in Ch. 14: Scottish Justice of my self-published novel In the Shadows of Calton Hill.

At the risk of provoking further negative reactions, e.g. from the genetics and forensic science professions, here are some possible suggestions for resolving the immensely socially damaging DNA evidence situation:

1. Since most of the genetic theory that underpins them is both suspect and subject to special assumptions, combined likelihood ratios and misapplications of Bayes theorem should be abandoned altogether. In the case where there are fifteen DNA probes, an exploratory data analysis should be performed of the 15 corresponding (typically non-independent) samples of the allele lengths or their logs, and used to contrast the 15x1 vector X of log allele lengths measured from the trace at the crime scene with the vector Y of the log allele lengths measured from the suspect’s DNA. The quality of the data collection would need to be greatly improved to justify doing this.

2. A Bayesian analysis of the n 15x1 vectors of the log-allele lengths should then be employed to obtain a posterior predictive distribution for the vector Z of the 15 log-allele lengths for a randomly chosen individual from the reference population. Various predictive probabilities may then be used to contrast the elements of X and Y, and some criterion would need to be decided upon by the Courts to thereby judge whether X and Y are close enough to indicate a convincing enough match.

3. As an initial suggestion, it might be reasonable to assume that the n vectors of log-allele lengths constitute a random sample from a multivariate normal distribution with unknown and unconstrained mean vector μ and covariance matrix C. If the prior distribution of μ and C is taken to belong to the conjugate multivariate normal/inverted Wishart family (e.g. [15], p. 290), then the posterior predictive distribution of Z is generalised multivariate-t. This facilitates technically feasible inferential Bayesian calculations for contrasting the elements of X and Y, and prior information about μ and C can be incorporated if available, e.g. by reference to other samples.
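
To indicate what the calculation in suggestion 3 might look like, here is a rough sketch under stated assumptions. It uses the textbook conjugate update for a normal/inverse-Wishart prior, in which μ given C is normal with mean `mu0` and covariance C/`kappa0`, and C is inverse-Wishart with `nu0` degrees of freedom and scale matrix `Lambda0`. These hyperparameter names and values, the function name, and the simulated data are all illustrative assumptions of mine, and the parameterisation in [15] may differ. The data are synthetic and are not real allele lengths.

```python
import numpy as np

def niw_posterior_predictive(data, mu0, kappa0, nu0, Lambda0):
    """Return (df, location, scale matrix) of the multivariate-t posterior
    predictive distribution for a new vector Z, under a conjugate
    normal / inverse-Wishart prior (standard textbook parameterisation)."""
    data = np.asarray(data, dtype=float)
    n, d = data.shape
    xbar = data.mean(axis=0)
    centred = data - xbar
    S = centred.T @ centred                    # scatter matrix about the sample mean

    # Conjugate updates of the four hyperparameters.
    kappa_n = kappa0 + n
    nu_n = nu0 + n
    mu_n = (kappa0 * mu0 + n * xbar) / kappa_n
    diff = (xbar - mu0).reshape(-1, 1)
    Lambda_n = Lambda0 + S + (kappa0 * n / kappa_n) * (diff @ diff.T)

    # Posterior predictive: multivariate t with these parameters.
    df = nu_n - d + 1
    scale = Lambda_n * (kappa_n + 1) / (kappa_n * df)
    return df, mu_n, scale

# Synthetic stand-in for n = 200 vectors of 15 correlated log-allele lengths.
rng = np.random.default_rng(0)
d, n = 15, 200
A = rng.normal(size=(d, d))
true_cov = A @ A.T / d + np.eye(d)             # deliberately non-diagonal
data = rng.multivariate_normal(np.zeros(d), true_cov, size=n)

df, loc, scale = niw_posterior_predictive(
    data, mu0=np.zeros(d), kappa0=1.0, nu0=d + 2, Lambda0=np.eye(d))
print(df, loc.shape, scale.shape)
```

The off-diagonal elements of `scale` carry the dependence between probes which the multiplication of 15 separate likelihood ratios ignores. How the resulting predictive distribution should be used to decide whether X and Y are close enough is exactly the criterion that the Courts would need to settle; the sketch does not attempt it.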

In the meantime, thousands of potentially innocent people are still being falsely convicted using versions of Bayes Theorem. This was easily the most terrifying misapplication of the Bayesian paradigm of the twentieth century, and it is something which the Bayesian profession should not be proud of.

[In early January 2014, the Bayesian forensics expert Professor David Balding of University College London kindly advised me that many of the statistical problems inherent in the evaluation of DNA evidence are now in the process of being overcome. See, for example, David’s paper ‘Statistical Evaluation of Forensic DNA Profile Evidence’ (with Christopher Steele, Ann. Rev. Stat. Appl., 2014).]
